AI safety researchers from OpenAI, Anthropic, and nonprofit organizations are speaking out publicly against the “reckless” and “completely irresponsible” safety culture at xAI, the billion-dollar AI startup owned by Elon Musk.

The criticisms follow weeks of scandals at xAI that have overshadowed the company’s technological advances.

Last week, the company’s AI chatbot, Grok, spouted antisemitic comments and repeatedly called itself “MechaHitler.” Shortly after xAI took its chatbot offline to address the problem, it launched an increasingly capable frontier AI model, Grok 4, which TechCrunch and others found to consult Elon Musk’s personal politics for help answering hot-button issues. In the latest development, xAI launched AI companions that take the form of a hyper-sexualized anime woman and an overly aggressive panda.

Friendly joshing among employees of competing AI labs is fairly normal, but these researchers seem to be calling for increased attention to xAI’s safety practices, which they claim to be at odds with industry norms.

“I didn’t want to post on Grok safety since I work at a competitor, but it’s not about competition,” said Boaz Barak, a computer science professor currently on leave from Harvard to work on safety research at OpenAI, in a Wednesday post on X. “I appreciate the scientists and engineers at xAI but the way safety was handled is completely irresponsible.”

Barak particularly takes issue with xAI’s decision not to publish system cards, the industry-standard reports that detail training methods and safety evaluations in a good-faith effort to share information with the research community. As a result, Barak says it’s unclear what safety training was done on Grok 4.

OpenAI and Google have a spotty reputation themselves when it comes to promptly sharing system cards when unveiling new AI models. OpenAI decided not to publish a system card for GPT-4.1, claiming it was not a frontier model. Meanwhile, Google waited months after unveiling Gemini 2.5 Pro to publish a safety report. However, these companies historically publish safety reports for all frontier AI models before they enter full production.

Barak also notes that Grok’s AI companions “take the worst issues we currently have for emotional dependencies and tries to amplify them.” In recent years, we’ve seen countless stories of unstable people developing concerning relationships with chatbots, and how AI’s over-agreeable answers can tip them over the edge of sanity.

Samuel Marks, an AI safety researcher with Anthropic, also took issue with xAI’s decision not to publish a safety report, calling the move “reckless.”

“Anthropic, OpenAI, and Google’s release practices have issues,” Marks wrote in a post on X. “But they at least do something, anything to assess safety pre-deployment and document findings. xAI does not.”

xAI launched Grok 4 without any documentation of their safety testing. This is reckless and breaks with industry best practices followed by other major AI labs.

If xAI is going to be a frontier AI developer, they should act like one. 🧵

The reality is that we don’t really know what xAI did to test Grok 4, and the world seems to be finding out about it in real time. Several of these issues have since gone viral, and xAI claims to have addressed them with tweaks to Grok’s system prompt.

OpenAI, Anthropic, and xAI did not respond to TechCrunch’s request for comment.

Dan Hendrycks, a safety advisor for xAI and director of the Center for AI Safety, posted on X that the company did “dangerous capability evaluations” on Grok 4, indicating that the company did some pre-deployment testing for safety concerns. However, the results of those evaluations have not been publicly shared.

“It concerns me when standard safety practices aren’t upheld across the AI industry, like publishing the results of dangerous capability evaluations,” said Steven Adler, an AI researcher who previously led dangerous capability evaluations at OpenAI, in a statement to TechCrunch. “Governments and the public deserve to know how AI companies are handling the risks of the very powerful systems they say they’re building.”

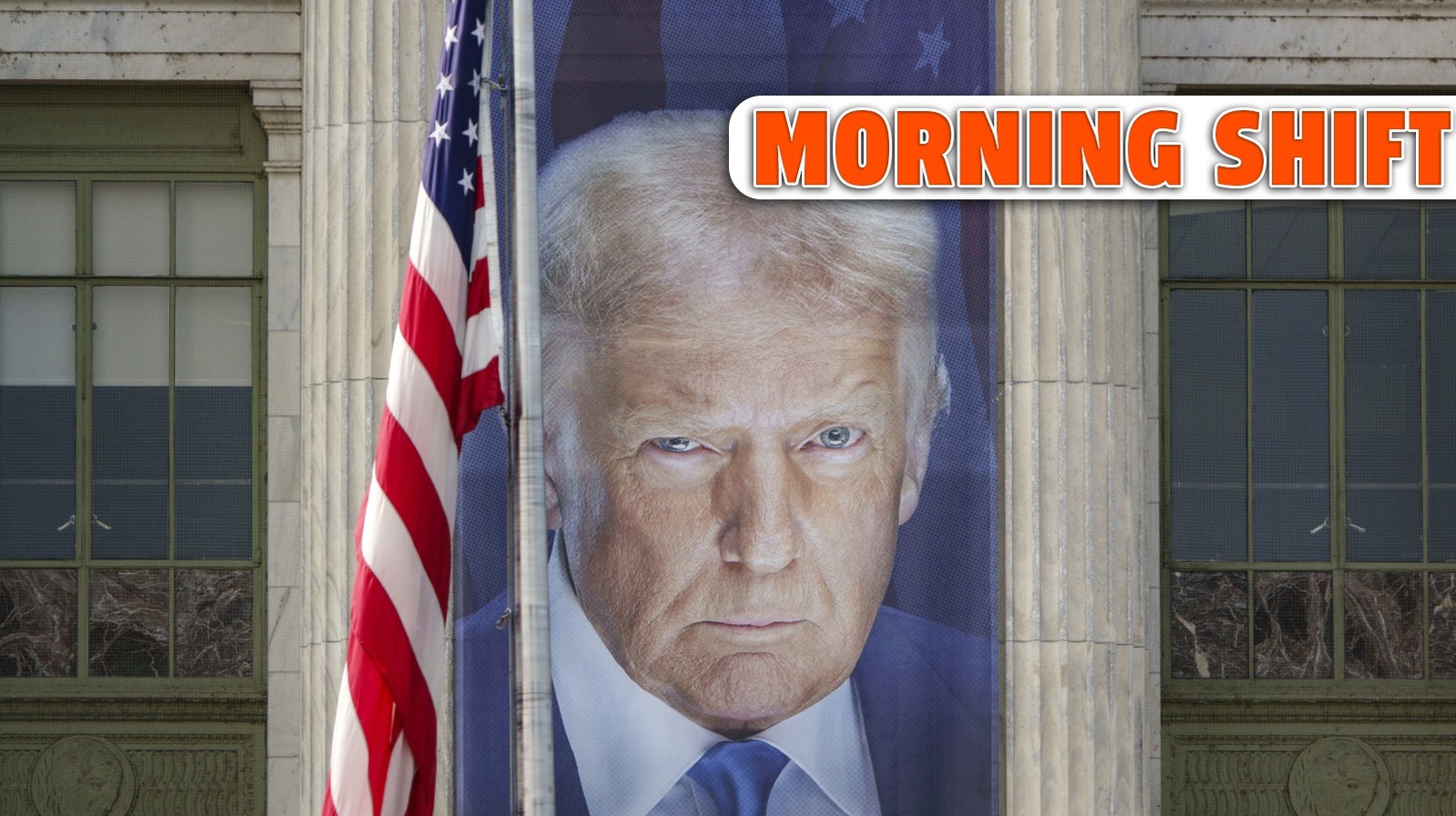

What’s interesting about xAI’s questionable safety practices is that Musk has long been one of the AI safety industry’s most notable advocates. The billionaire owner of xAI, Tesla, and SpaceX has warned many times about the potential for advanced AI systems to cause catastrophic outcomes for humans, and he’s praised an open approach to developing AI models.

And yet, AI researchers at competing labs claim xAI is veering from industry norms around safely releasing AI models. In doing so, Musk’s startup may be inadvertently making a strong case for state and federal lawmakers to set rules around publishing AI safety reports.

There are several attempts at the state level to do so. California state Sen. Scott Wiener is pushing a bill that would require leading AI labs, likely including xAI, to publish safety reports, while New York Gov. Kathy Hochul is currently considering a similar bill. Advocates of these bills note that most AI labs publish this type of information anyway, but evidently not all of them do it consistently.

AI models today have yet to exhibit real-world scenarios in which they create truly catastrophic harms, such as the death of people or billions of dollars in damages. However, many AI researchers say that this could be a problem in the near future given the rapid progress of AI models and the billions of dollars Silicon Valley is investing to further improve AI.

But even for skeptics of such catastrophic scenarios, there’s a strong case to suggest that Grok’s misbehavior makes the products it powers today significantly worse.

Grok spread antisemitism around the X platform this week, just a few weeks after the chatbot repeatedly brought up “white genocide” in conversations with users. Musk has indicated that Grok will soon be more ingrained in Tesla vehicles, and xAI is trying to sell its AI models to The Pentagon and other enterprises. It’s hard to imagine that people driving Musk’s cars, federal workers protecting the U.S., or enterprise employees automating tasks will be any more receptive to these misbehaviors than users on X.

Several researchers argue that AI safety and alignment testing not only ensures that the worst outcomes don’t happen, but also protects against near-term behavioral issues.

At the very least, Grok’s incidents tend to overshadow xAI’s rapid progress in developing frontier AI models that best OpenAI and Google’s technology, just a couple of years after the startup was founded.
