Real AI fraud alert: Why our voice, our face - and our money - could be next in line

In this stock image: The OpenAI ChatGPT logo is displayed on a smartphone, with CEO Sam Altman in the background. Credit: El editorial, Shutterstock

‘Just because we’re not releasing the technology doesn’t mean it doesn’t exist… Some bad actor is going to release it. This is coming very, very soon.’

You might want to think twice before trusting that FaceTime call from your mum – or that urgent voicemail from your boss. According to OpenAI CEO Sam Altman, the age of deepfake fraud isn’t coming. It’s already here – and it sounds exactly like you. According to Altman, this is just the beginning of a global crisis.

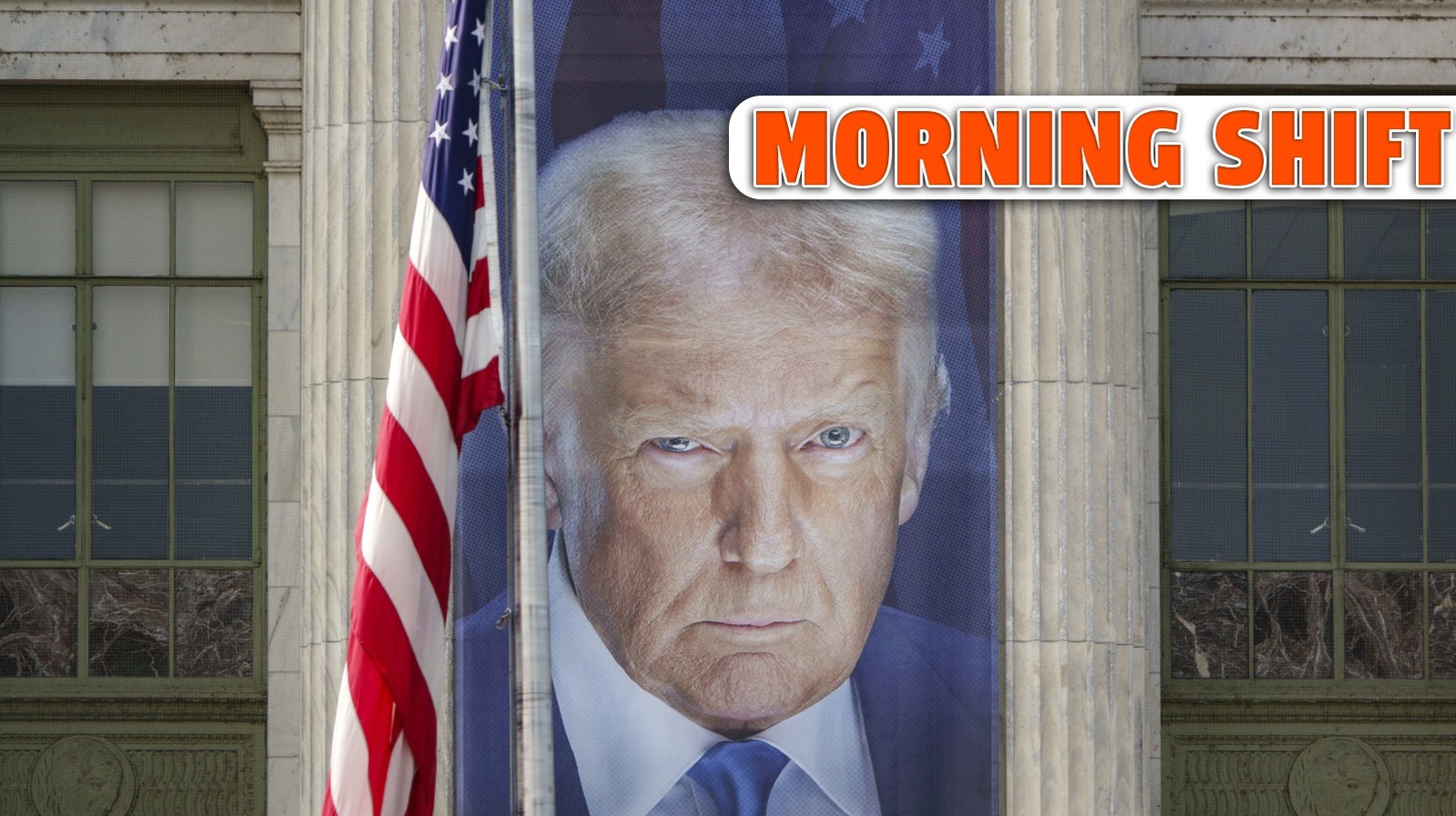

During a recent event in Washington, DC, Altman issued an ominous warning: generative AI will soon allow bad actors to perfectly imitate people’s voices, faces, and even personalities – and use them to scam you out of your money, your data, or both. Anyone will be able to do it.

‘Right now, it’s a voice call; soon it’s going to be a video or FaceTime that’s indistinguishable from reality,’ Altman told US Federal Reserve vice chair Michelle Bowman.

So, what’s actually going on here – and should you be worried?

Voiceprints and video fakes: The new weapons of fraud

Altman’s concern centres on the fact that some banks and companies still use voiceprint authentication – that is, they let you move money or access accounts just by recognising your voice. But with today’s AI tools, it takes just a few seconds of audio to clone someone’s voice. There are now dozens of apps – some free – that can do it.

Scammers are already calling people and recording their voices when they answer the phone. It takes just one sample for them to be able to produce a realistic version of your voice saying anything they want.

Combine that with increasingly realistic AI-generated video, and you’ve got a perfect storm: scammers can now create entirely fake FaceTime or video calls that look and sound like your spouse, your boss, or your child. You’re not just getting a suspicious email anymore – you’re getting a fake person.

Real-world scams: When your ‘son’ isn’t really your son

These warnings aren’t theoretical. Here are some examples of how AI fraud is already unfolding:

As reported by CBC Canada, scammers cloned the voice of a woman’s son and called her claiming he ‘needed to talk’. ‘It was his voice,’ she said.

Manitoba mum hears son’s voice on the phone – but it wasn’t him

Leann Friesen, a mother of three from the small community of Miami, Manitoba, received a strange call from a private number a few weeks ago. What she heard on the other end stopped her in her tracks – it was her son’s voice, sounding distressed.

“He said, ‘Hi mom,’ and I said hi,” Friesen recalled. “He said, ‘Mom, can I tell you anything?’ and I said yes. He said, ‘Without judgment?’”

That’s when alarm bells started ringing.

“I’m getting a little bit confused at that point – like, why are you asking me this?” she said.

Something about the conversation felt wrong. Friesen decided to cut it short, telling the caller she’d ring back on her son’s mobile – and hung up.

She immediately dialled his number.

She said she woke him up. He’d been asleep the whole time, because he worked shifts. “He said, ‘Mom, I didn’t call you.’”

“It was definitely my son’s voice that was on the other end of the line.”

The Hong Kong deepfake video and the FBI case

In Hong Kong, a finance worker was tricked by a video deepfake into transferring over US$25 million after believing they were in a Zoom meeting with their company CFO.

According to the FBI, impersonators in the US have used AI-generated calls claiming to be government officials to access sensitive information – in one case, even pretending to be Secretary of State Marco Rubio in calls to foreign diplomats.

So what exactly is OpenAI doing?

Altman insists OpenAI isn’t building impersonation tools. Technically, that’s true. But some of its projects can be used that way.

Sora, OpenAI’s video generator, creates ultra-realistic videos from text prompts. It’s a leap forward in creative AI – but potentially a leap forward for fraud too. Imagine feeding it a script and asking for “a video of Joe Bloggs calling his bank to request a password reset.”

Eyeball scanner controversy

Altman also backs Worldcoin’s Orb, a controversial biometric tool that scans your eyeball to verify your identity. It’s being marketed as a new kind of proof-of-personhood – but critics argue it’s a dystopian answer to a digital problem.

OpenAI says it doesn’t condone misuse, but Altman admits that others might not play so nicely.

‘Just because we’re not releasing the technology doesn’t mean it doesn’t exist… Some bad actor is going to release it. This is coming very, very soon.’

The tech is outpacing the law

Governments are still scrambling to catch up. The FBI and Europol have issued warnings, but global laws around AI impersonation are patchy at best. The UK’s Online Safety Act doesn’t yet cover all forms of synthetic media, and regulators are still debating how to define AI-generated fraud.

Meanwhile, scammers are exploiting the lag.

What can you do to protect yourself?

Altman may be worried, but there are ways to protect yourself and your accounts. Here’s what you should consider doing today:

- Stop using voice authentication: If your bank uses it, ask for a different method. It’s no longer safe.

- Use strong, unique passwords and two-factor authentication (2FA): Prefer app-based 2FA over SMS wherever possible. It remains your best defence.

- Verify through another channel: If you get a suspicious call or video message – even if it looks real – contact the person separately on another platform or phone number.

- Educate your family members: Some older relatives are particularly vulnerable. Help them understand what AI fraud looks and sounds like.

- Be cautious with your voice online: It only takes a few seconds of clear audio to create a convincing fake. Avoid posting long videos or voicemails if not necessary.

Final thoughts: We’re not in Kansas anymore

AI tools that can imitate your voice or face with chilling accuracy are no longer science fiction. They’re out in the wild. Sam Altman’s warning might sound self-serving, but he’s not wrong: this is going to get worse before it gets better.

And while the fraudsters are moving fast, our institutions – from banks to regulators – are moving painfully slowly.

Until the system catches up, the best security you’ve got is your own scepticism.

So next time your ‘boss’ sends a video message asking for a wire transfer at 4 AM? You might want to sleep on it.
