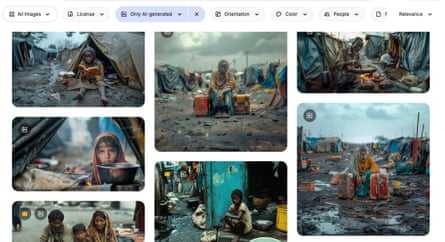

AI-generated images of extreme poverty, children and sexual violence survivors are flooding stock photo sites and increasingly being used by leading health NGOs, according to global health professionals who have voiced concern over a new era of “poverty porn”.

“All over the place, people are using it,” said Noah Arnold, who works at Fairpicture, a Swiss-based organisation focused on promoting ethical imagery in global development. “Some are actively using AI imagery, and others, we know that they’re experimenting at least.”

Arsenii Alenichev, a researcher at the Institute of Tropical Medicine in Antwerp studying the production of global health images, said: “The images replicate the visual grammar of poverty – children with empty plates, cracked earth, stereotypical visuals.”

Alenichev has collected more than 100 AI-generated images of extreme poverty used by individuals or NGOs as part of social media campaigns against hunger or sexual violence. Images he shared with the Guardian show exaggerated, stereotype-perpetuating scenes: children huddled together in muddy water; an African girl in a wedding dress with a tear staining her cheek. In a comment piece published on Thursday in the Lancet Global Health, he argues these images amount to “poverty porn 2.0”.

While it is difficult to quantify the prevalence of the AI-generated images, Alenichev and others say their use is on the rise, driven by concerns over consent and cost. Arnold said that US funding cuts to NGO budgets had made matters worse.

“It is quite clear that various organisations are starting to consider synthetic images instead of real photography, because it’s cheap and you don’t need to bother with consent and everything,” said Alenichev.

AI-generated images of extreme poverty now appear in their dozens on popular stock photo sites, including Adobe Stock Photos and Freepik, in response to queries such as “poverty”. Many bear captions such as “Photorealistic kid in refugee camp”; “Asian children swim in a river full of waste”; and “Caucasian white volunteer provides medical consultation to young black children in African village”. Adobe sells licences to the last two photos in that list for about £60.

“They are so racialised. They should never even let those be published because it’s like the worst stereotypes about Africa, or India, or you name it,” said Alenichev.

Joaquín Abela, CEO of Freepik, said the responsibility for using such extreme images lay with media consumers, and not with platforms such as his. The AI stock photos, he said, are generated by the platform’s global community of users, who can receive a licensing fee when Freepik’s customers choose to buy their images.

Freepik had attempted to curb biases it had found in other parts of its photo library, he said, by “injecting diversity” and trying to ensure gender balance in the photos of lawyers and CEOs hosted on the site.

But, he said, there was only so much his platform could do. “It’s like trying to dry the ocean. We make an effort, but in reality, if customers worldwide want images a certain way, there is absolutely nothing that anyone can do.”

In the past, leading charities have used AI-generated images as part of their communications strategies on global health. In 2023, the Dutch arm of UK charity Plan International released a video campaign against child marriage containing AI-generated images of a girl with a black eye, an older man and a pregnant teenager.

Last year, the UN posted a video on YouTube with AI-generated “re-enactments” of sexual violence in conflict, which included AI-generated testimony from a Burundian woman describing being raped by three men and left to die in 1993 during the country’s civil war. The video was removed after the Guardian contacted the UN for comment.

A UN Peacekeeping spokesperson said: “The video in question, which was produced over a year ago using a fast-evolving tool, has been taken down, as we believed it shows improper use of AI, and may pose risks regarding information integrity, blending real footage and near-real artificially generated content.

“The United Nations remains steadfast in its commitment to support victims of conflict-related sexual violence, including through innovation and creative advocacy.”

Arnold said the rising use of these AI images comes after years of debate in the sector around ethical imagery and dignified storytelling about poverty and violence. “Supposedly, it’s easier to take ready-made AI visuals that come without consent, because it’s not real people.”

Kate Kardol, an NGO communications consultant, said the images frightened her, and recalled earlier debates about the use of “poverty porn” in the sector.

“It saddens me that the fight for more ethical representation of people experiencing poverty now extends to the unreal,” she said.

Generative AI tools have long been found to replicate – and at times exaggerate – broader societal biases. The proliferation of biased images in global health communications may make the problem worse, said Alenichev, because the images could filter out into the wider internet and be used to train the next generation of AI models, a process which has been shown to amplify prejudice.

A spokesperson for Plan International said the NGO had, as of this year, “adopted guidance advising against using AI to depict individual children”, and said the 2023 campaign had used AI-generated imagery to safeguard “the privacy and dignity of real girls”.

Adobe declined to comment.
